What is the prior?

A prior (prior probability) is a probability distribution that expresses one's beliefs about an uncertain quantity before some evidence is taken into account. In statistical inference and Bayesian techniques, the prior is combined with the likelihood of the observed data to produce the posterior, so it plays an important role in the conclusions drawn from that data.

Black Swan Paradox

The theory of black swan events uses the black swan as a metaphor for an event that comes as a surprise, has a major effect, and is often inappropriately rationalized after the fact with the benefit of hindsight. The term is based on an ancient saying that presumed black swans did not exist, a saying that was reinterpreted to teach a different lesson after black swans were discovered in the wild.

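Such events have a huge impact when training Bayesian classifiers, especially naive Bayes: if a feature value never appears together with a class in the training data, its estimated conditional probability is 0, and the whole product of probabilities becomes 0 no matter what the other features say. To avoid blindly rejecting a data point this way, we use a prior so that unseen events never receive exactly zero probability. Additive (Laplace) smoothing, for example, corresponds to placing a symmetric Dirichlet prior on the feature probabilities.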
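A minimal sketch of the zero-frequency problem, assuming a hypothetical two-feature toy dataset (colour, habitat) in which no black swan was ever observed, and additive smoothing as the way the prior enters. The names `TRAIN` and `naive_bayes_scores` are illustrative and not from any library. With `alpha=0` (raw counts) the swan score collapses to 0; with `alpha=1` (Laplace smoothing) it stays small but positive.

```python
from collections import Counter

# Hypothetical toy data: ((colour, habitat), label). No black swan appears.
TRAIN = (
    [(("white", "lake"), "swan")] * 6
    + [(("black", "lake"), "duck")] * 2
    + [(("white", "field"), "duck")] * 2
)


def naive_bayes_scores(train, x, alpha):
    """Unnormalised naive Bayes scores P(c) * prod_i P(x_i | c).

    alpha is the additive-smoothing pseudo-count: alpha = 0 gives the raw
    maximum-likelihood estimates, alpha = 1 is Laplace smoothing.
    """
    class_counts = Counter(label for _, label in train)
    n_features = len(x)
    # Number of distinct values seen in training for each feature.
    vocab_sizes = [len({features[i] for features, _ in train}) for i in range(n_features)]
    # joint[(i, value, label)] = how often feature i took `value` in class `label`.
    joint = Counter(
        (i, features[i], label) for features, label in train for i in range(n_features)
    )
    scores = {}
    for label, count in class_counts.items():
        score = count / len(train)  # class prior P(c), left unsmoothed here
        for i, value in enumerate(x):
            score *= (joint[(i, value, label)] + alpha) / (count + alpha * vocab_sizes[i])
        scores[label] = score
    return scores


query = ("black", "lake")
print(naive_bayes_scores(TRAIN, query, alpha=0))  # swan score is exactly zero
print(naive_bayes_scores(TRAIN, query, alpha=1))  # swan score is small but positive
```

In this toy data the duck class still wins under smoothing; the point is only that the swan hypothesis is no longer ruled out by a single unseen feature value.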

Prior and Bayes Theorem

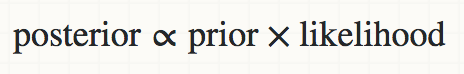

Bayes' theorem, which gives the probability of a hypothesis given some observed data, combines the prior P(H) with the likelihood P(D|H) and the marginal likelihood P(D) to calculate the posterior P(H|D). The formula for Bayes' theorem is:

$$P(H \mid D) = \frac{P(D \mid H)\,P(H)}{P(D)}$$

where D represents the data and H represents the model/hypothesis.
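The formula can be applied directly over a finite set of hypotheses, computing the marginal likelihood as P(D) = Σ_h P(D|h) P(h). This is a minimal sketch; the function name `posterior` and the two-coin example (a fair coin versus a hypothetical coin that lands heads 90% of the time, after observing one head) are illustrative assumptions.

```python
def posterior(prior, likelihood):
    """Apply Bayes' theorem over a finite set of hypotheses.

    prior[h]      -- P(H = h)
    likelihood[h] -- P(D | H = h) for the observed data D
    Returns a dict mapping each h to P(H = h | D).
    """
    # Marginal likelihood P(D) = sum over h of P(D | h) * P(h)
    evidence = sum(likelihood[h] * prior[h] for h in prior)
    return {h: likelihood[h] * prior[h] / evidence for h in prior}


# Hypothetical example: equal prior belief in a fair and a biased coin,
# then one head is observed.
prior = {"fair": 0.5, "biased": 0.5}
likelihood = {"fair": 0.5, "biased": 0.9}  # P(heads | coin)
print(posterior(prior, likelihood))
```

Observing a single head shifts belief toward the biased coin (from 0.5 to about 0.64), because that hypothesis assigns the data a higher likelihood.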

Bayes' theorem is fundamental to machine learning because it lets us evaluate hypotheses in light of observed data. Since an agent can only view the world through the data it is given, it must be able to extract reasonable hypotheses from that data and from any prior knowledge. The prior lets an agent weigh a hypothesis using previous observations rather than relying only on the likelihood of the current data. This helps the agent make informed decisions as it trains.

Rules of Bayesian Learning

- If the prior is uninformative, the posterior is very much determined by the data (the posterior is data-driven)

- If the prior is informative, the posterior is a compromise between the prior and the data

- The more informative the prior, the more data you need to "change" your beliefs, so to speak, because the posterior is very much driven by the prior information

- If you have a lot of data, the data will dominate the posterior distribution (they will overwhelm the prior)
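The rules above can be seen in a Beta-Binomial model, where a Beta(a, b) prior on a coin's heads probability combined with k heads in n flips gives a Beta(a + k, b + n - k) posterior. This is a minimal sketch; the function name, the choice of Beta(1, 1) as the uninformative prior and Beta(50, 50) as the informative one, and the flip counts are all hypothetical.

```python
def beta_binomial_posterior_mean(a, b, heads, trials):
    """Posterior mean of a coin's heads probability under a Beta(a, b) prior.

    With `heads` successes in `trials` flips the posterior is
    Beta(a + heads, b + trials - heads), whose mean is computed below.
    """
    return (a + heads) / (a + b + trials)


UNINFORMATIVE = (1, 1)  # uniform prior over [0, 1]
INFORMATIVE = (50, 50)  # strong belief that the coin is close to fair

# Hypothetical data: the same 80% heads rate, first with little data, then with a lot.
for heads, trials in [(8, 10), (800, 1000)]:
    for name, (a, b) in [("uninformative", UNINFORMATIVE), ("informative", INFORMATIVE)]:
        mean = beta_binomial_posterior_mean(a, b, heads, trials)
        print(f"{trials:>5} flips, {name:>13} prior: posterior mean = {mean:.3f}")
```

With 10 flips the uniform prior gives a posterior mean of 0.750, while the informative prior holds it near 0.527; with 1000 flips both move close to the observed rate (0.799 and 0.773), as the data overwhelm the prior.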

Example of Prior Probability

In this hypothetical example, let’s say you bumped into another person on the street somewhere in the world. What is the probability that the person you ran into was of Chinese ethnic descent? Given no other information, you might say that the probability was around 18% (there are approximately 1.4 billion Chinese nationals out of a world population of 7.6 billion).
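The prior here is just a population share, which assumes the person you bumped into is drawn uniformly at random from the world population. A minimal sketch of the arithmetic, using the approximate figures from the text (the constant names are illustrative):

```python
CHINA_POPULATION = 1.4e9  # approximate figure from the text
WORLD_POPULATION = 7.6e9  # approximate figure from the text

# Prior: share of the world population, assuming a uniformly random encounter.
prior_chinese = CHINA_POPULATION / WORLD_POPULATION
print(f"P(Chinese) = {prior_chinese:.1%}")
```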

Now that we’ve made our best estimate of a prior probability given no other context, we’ll add the information that this event took place somewhere in the United States. With this knowledge, we would calculate a new probability based on the population distribution of ethnically Chinese people living in the United States. In other words, we update our prior probability (unconditional) given a new condition (being in the United States) to obtain a posterior probability (conditional on the new evidence).
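Written with Bayes' theorem, and again assuming the person is drawn uniformly at random from the world population, the update reduces to a ratio of counts. The symbols below are introduced only for this illustration: $N_{\text{US}}$ is the US population, $N_{\text{Chinese}}$ the number of Chinese people worldwide, $N_{\text{Chinese, US}}$ the number living in the US, and $N$ the world population.

$$
P(\text{Chinese} \mid \text{US})
= \frac{P(\text{US} \mid \text{Chinese})\,P(\text{Chinese})}{P(\text{US})}
= \frac{\dfrac{N_{\text{Chinese, US}}}{N_{\text{Chinese}}} \cdot \dfrac{N_{\text{Chinese}}}{N}}{\dfrac{N_{\text{US}}}{N}}
= \frac{N_{\text{Chinese, US}}}{N_{\text{US}}}
$$

So the posterior is simply the share of ethnically Chinese people within the US population, in place of the world-wide share used as the prior.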

For a more detailed mathematical representation of the prior probability and how to calculate it, see the Bayesian inference page.